Today, I’ll research what it takes to programmatically query Google to determine a domain’s ranking position for a specific keyword. Understanding how to gather keyword rankings without triggering a Google block is the goal.

I’ll break this article into the following sections:

- The Top Methods Used

- Why These Methods Matter

In the first section, we explore the strategies that SEO tools use to track keyword rankings. In the second section, we discuss why it’s necessary to use these sophisticated methods.

Table of Contents

Section 1: Method

- APIs from Third-Party Providers

- Web Scraping with Proxies

- CAPTCHA Solvers

- Crowdsourced Data Collection

- Rank Tracking via Browser Automation

- Direct Partnerships with Search Engines

- Geolocation & Device-Specific Rank Tracking

- Search Console Data for Historical Tracking

- SERP Feature Tracking

- Reverse Engineering Algorithms

- SERP Data Aggregation

- Data Caching

- Overview of Methods

- Top 5 Frequently Asked Questions for Method

Section 2: Why?

- Avoiding Google’s Bot Detection Mechanisms

- Geographical and Device-Specific Ranking Variations

- Accurate Tracking Over Time

- Tracking Multiple SERP Features

- Volume of Data

- Real-Time Monitoring and Competitive Analysis

- APIs Are Not Always Sufficient

- Legal and Compliance Considerations

- Cost Efficiency

- Overview of Why?

- Top 5 Frequently Asked Questions for Why?

Section 1: Method

SEO keyword tracking service providers use a variety of methods to query Google and other search engines to track keyword rankings. These methods aim to provide accurate ranking data while minimizing the risk of being blocked by search engines or triggering CAPTCHA challenges. The following are some of the common methods:

1. APIs from Third-Party Providers

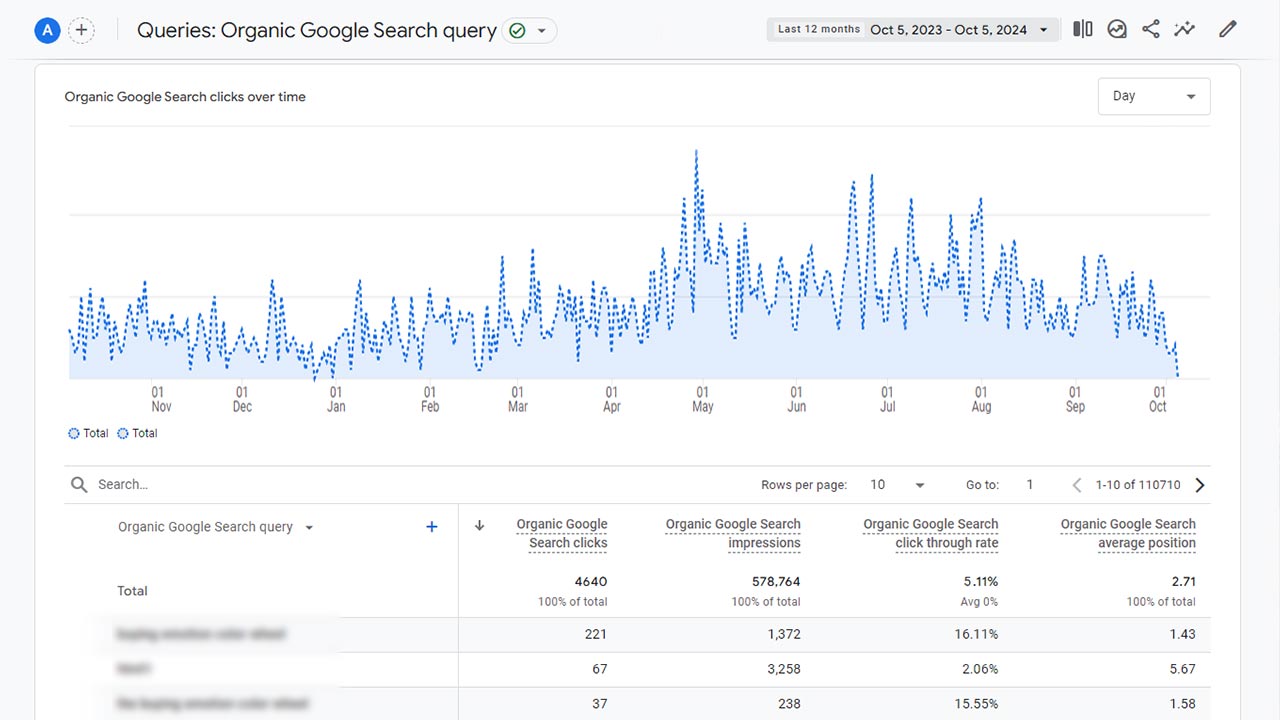

- Google Search Console API: Many SEO tools use Google’s official Search Console API, which allows access to search performance data directly from Google. It provides keyword positions, impressions, and click data over time, but is limited to the keywords that Google reports for your own website.

- SERP API Providers: Several third-party services provide SERP data through APIs. These services have already built robust solutions for querying search engines without triggering penalties. They provide accurate ranking data for keywords, including geographic and device-specific rankings. Popular SERP API providers include:

- SERP API: Offers real-time SERP data and supports multiple search engines.

- DataForSEO: Provides ranking data for keywords, SERP features, and more.

- SEMrush API: Offers keyword ranking data along with a suite of SEO tools.

- Ahrefs API: Provides ranking and backlink data.

- Moz API: Offers keyword tracking and domain analysis.

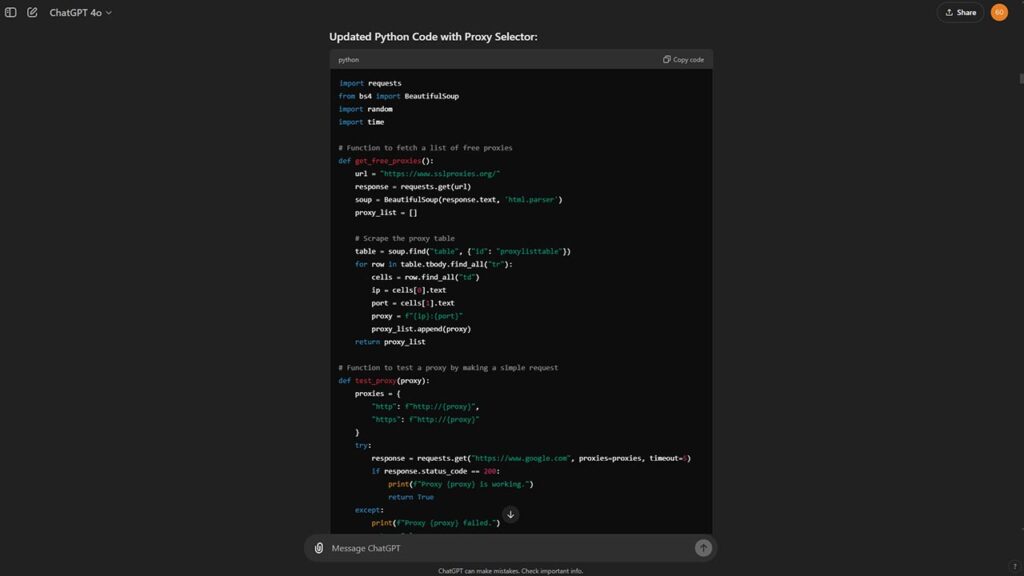

2. Web Scraping with Proxies

- Custom Web Scraping: Some providers develop their own web scraping systems to extract ranking data directly from search engine results pages (SERPs). Since Google may block scrapers if too many requests come from the same IP or if they are too frequent, providers often combine several techniques to mitigate this:

- Rotating Proxies: SEO tools use rotating proxies to distribute search requests across multiple IP addresses, mimicking requests from different users or locations. This helps avoid Google’s rate-limiting and CAPTCHA challenges.

- Geolocation Targeting: They may use proxies located in specific regions to track rankings from different countries or cities, as rankings can vary by geographic location.

- Headless Browsers: Tools like Puppeteer or Selenium allow scraping to mimic real user behavior more effectively, such as loading JavaScript-heavy content and interacting with SERP features like dropdowns or pagination.

3. CAPTCHA Solvers

- To overcome CAPTCHA challenges presented by Google, some SEO tools integrate automated CAPTCHA-solving services like 2Captcha or AntiCaptcha. These services solve CAPTCHA puzzles in real time and allow continued access to the search engine.

4. Crowdsourced Data Collection

- Some tools use a network of real users or devices (crowdsourcing) to collect keyword ranking data. These users query Google naturally on their local devices, and the results are aggregated into a database that is later used by the service. This method ensures accurate and “real” data without risking bot detection.

5. Rank Tracking via Browser Automation

- Headless Browsers: Tools may use headless browsers like Puppeteer or Selenium to simulate real user behavior when visiting Google SERPs. By mimicking human interaction with search engines, they can extract keyword rankings while avoiding detection as bots.

- Click Simulation: Some advanced systems simulate clicking on certain links in the SERPs to monitor user interactions or evaluate rankings for specific features, such as featured snippets or local results.

6. Direct Partnerships with Search Engines (Rare)

- Although rare for smaller SEO providers, some large platforms may establish partnerships with search engines, gaining access to exclusive data. This is less common with Google but may occur with other search engines like Bing.

7. Geolocation & Device-Specific Rank Tracking

- Mobile vs. Desktop Tracking: Rankings can vary significantly between desktop and mobile results, so many providers track rankings separately for mobile and desktop using device emulation or real-device tracking (especially on mobile SERPs).

- Localized Tracking: Keyword rankings often vary by location, so SEO tools may use IP-based geolocation or proxies to query search engines from specific geographic areas. This allows tracking of localized results, which is particularly important for local SEO.

8. Search Console Data for Historical Tracking

- Google Search Console: Providers may offer integrations with Search Console to track historical keyword performance. This data isn’t always real-time and doesn’t provide full SERP rankings, but it gives insights into the average position, clicks, and impressions for queries.

9. SERP Feature Tracking

- In addition to regular keyword ranking tracking, SEO services also track SERP features like:

- Featured Snippets

- Local Packs

- Knowledge Panels

- Video Carousels

- Images

- People Also Ask

- They use the same scraping methods but look for specific HTML structures that indicate the presence of these features.

10. Reverse Engineering Algorithms

- Data Models and Estimation: Some platforms use machine learning models or data analysis techniques to estimate keyword positions based on known ranking factors. While not directly querying Google, these models attempt to predict movements in SERP rankings using patterns derived from previous data.

11. SERP Data Aggregation

- SERP Aggregators: Some keyword tracking services may not query Google directly for each user but instead aggregate SERP data from multiple users and sources. This aggregated data is often updated frequently and gives a good indication of keyword rankings across different geographies, devices, and languages.

12. Data Caching

- Cached Results: Many SEO tools store historical data and cache search engine results to provide fast insights without querying Google for every request. This limits the number of live queries while still providing users with near-real-time data.

Overview of Methods

- APIs: Use official or third-party APIs to access keyword ranking data.

- Scraping: Use web scraping techniques with rotating proxies and user-agent rotation to gather SERP data.

- Crowdsourced Data: Gather data from real users or devices to bypass rate limits and capture accurate results.

- Geolocation and Device-Specific Data: Use proxies or real devices to capture location-based and mobile/desktop-specific results.

- Headless Browsers: Use headless browsers to mimic user behavior and extract data from complex SERP layouts.

- CAPTCHA Solving: Use CAPTCHA solving services to handle automated challenges.

These techniques are employed by major SEO platforms like SEMrush, Ahrefs, Moz, and others to provide accurate keyword rankings tracking services.

Top 5 Frequently Asked Questions for Methods

Section 2: Why?

SEO tool providers need to use a variety of sophisticated methods for keyword rankings tracking primarily because of the complexity and restrictions imposed by search engines like Google. Here are the main reasons why SEO tool providers rely on methods like APIs, scraping with proxies, CAPTCHA solvers, and more:

1. Avoiding Google’s Bot Detection Mechanisms

- Google’s anti-scraping measures: Google employs advanced bot detection techniques to prevent automated scraping of search results. These include:

- CAPTCHAs: Google may display CAPTCHAs to verify that a human is performing the search.

- IP Blocking: If too many requests come from the same IP address in a short period, Google might block that IP or throttle the connection.

- Rate Limiting: Google enforces rate limits on automated queries, causing frequent or high-volume requests to be blocked.

- To circumvent these restrictions, SEO providers use rotating proxies, CAPTCHA-solving services, and headless browsers to appear more like real users.

2. Geographical and Device-Specific Ranking Variations

- Localized results: Search engine results vary based on location, and SEO tools need to capture keyword rankings from multiple regions to provide accurate data. For instance, a keyword might rank differently in New York versus London.

- Mobile vs. Desktop rankings: Google’s search algorithms treat mobile and desktop results differently. Tools need to gather data from both device types, and to do so, they may use emulated devices or proxies from different regions.

- Proxy Usage: To simulate searches from different locations, providers use rotating proxies based in the target countries or cities.

3. Accurate Tracking Over Time

- Search results fluctuate: Rankings for a keyword can fluctuate frequently due to algorithm updates, new content, or changes in competition. SEO tool providers need to consistently track these changes to offer accurate, real-time data to their users.

- Consistent scraping: To maintain accuracy, SEO tools rely on data caching to reduce the number of queries made to Google, but they still need to perform regular checks on live SERPs. This requires efficient methods like APIs or scraping that don’t get blocked.

4. Tracking Multiple SERP Features

- SERP feature tracking: In addition to organic rankings, SEO tools often track features like featured snippets, local packs, video carousels, people also ask, etc. Since these features are dynamic and often vary by search intent or location, tool providers need advanced scraping techniques and SERP analysis to monitor them effectively.

- API limitations: Google Search Console and other APIs provide limited insights into these rich features, making web scraping a necessity for comprehensive data collection.

5. Volume of Data

- Handling large volumes of keywords: Some businesses track thousands or even millions of keywords. Manually querying Google is impractical, so automation is required to handle the volume of data. SEO tools need to make frequent and large-scale requests while avoiding detection, hence the need for scalable systems like rotating proxies, headless browsers, and API integrations.

- Parallel requests: By using proxies and distributed scraping, SEO tool providers can query Google simultaneously from multiple IP addresses, making it easier to handle large datasets efficiently.

6. Real-Time Monitoring and Competitive Analysis

- Real-time data: SEO tools often provide real-time or near-real-time updates on keyword positions. To achieve this, they need to query search engines regularly without getting blocked. Techniques like using headless browsers and CAPTCHA solvers help achieve uninterrupted data collection.

- Competitor tracking: Many businesses want to track not just their own rankings but also those of their competitors. SEO tools use scraping and data aggregation methods to collect and analyze competitive rankings across keywords, geographies, and devices.

7. APIs Are Not Always Sufficient

- Google Search Console limitations: Google Search Console only provides ranking data for keywords that result in clicks or impressions for the site it’s connected to. It does not provide comprehensive data for keywords that don’t drive traffic to your site, or for competitor rankings. For full visibility, SEO tool providers rely on third-party SERP APIs or scraping methods.

- Lack of full SERP data: Google Search Console also doesn’t give access to full SERP data, including where competitors rank, or the presence of specific features like rich snippets or paid ads. This makes scraping essential for comprehensive tracking.

8. Legal and Compliance Considerations

- Complying with Google’s terms: While Google discourages scraping of its search results, SEO tool providers try to balance between providing value to users and complying with Google’s terms of service. Many providers prefer using APIs because they are officially sanctioned methods for accessing data, but APIs have limitations in terms of granularity and scope, which leads them to employ scraping and proxies as a supplement.

9. Cost Efficiency

- Cheaper than API subscriptions: For large-scale tracking of millions of keywords, APIs can be expensive, as many charge per keyword or query. Web scraping, though more complex, can be a more cost-effective solution for some providers when combined with intelligent techniques like rotating proxies or headless browser automation.

Overview of Why?

SEO tool providers need to use these methods to ensure they deliver accurate, up-to-date, and reliable data to their users. Google’s preventing abuse and manipulation, managing server load and resource allocation, maintaining compliance with privacy regulations: Automated queries can also access and analyze private user data, such as personalized search results. Google implements strict controls to prevent unauthorized data collection that could infringe on privacy laws, such as the GDPR.

Leave A Comment