Fireside chat with Perplexity Pro AI:

Multimodal AI refers to artificial intelligence systems that can process and integrate multiple types of data or inputs simultaneously, such as text, images, audio, and video. This allows the AI to gain a more comprehensive understanding of complex scenarios, similar to how humans use multiple senses to perceive the world around them.

Key aspects of multimodal AI include:

Definition and Functionality

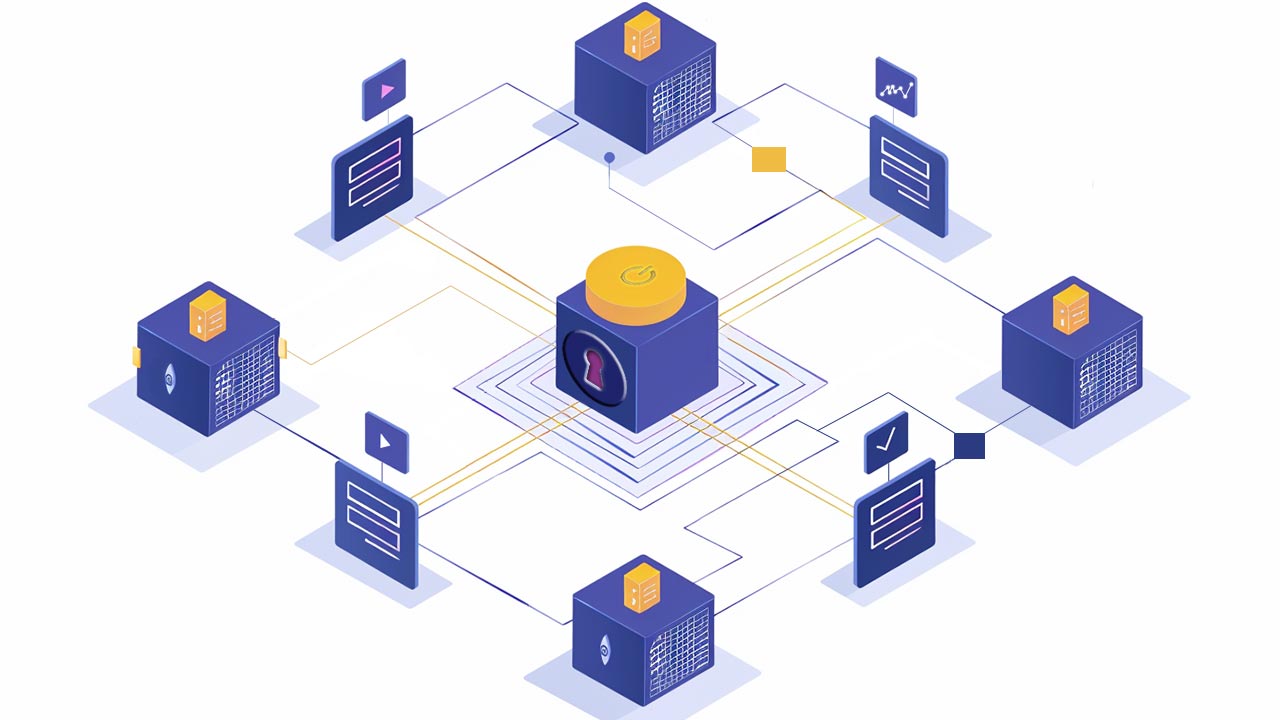

Multimodal AI systems typically consist of three main components:

- Input module: Composed of multiple unimodal neural networks, each handling a different type of data input.

- Fusion module: Integrates and processes information from various data types.

- Output module: Delivers results based on the combined analysis of multiple inputs.
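The three components above can be sketched in a few lines of Python. This is a toy illustration only, not how any production model is built: the encoders (`encode_text`, `encode_image`), the concatenation-based `fuse` step, and the linear `predict` output are all hypothetical stand-ins for the neural networks a real system would use.

```python
from typing import Dict, List

Vector = List[float]


def encode_text(text: str) -> Vector:
    """Input module (text): toy features, word count and average word length."""
    words = text.split()
    avg_len = sum(len(w) for w in words) / len(words) if words else 0.0
    return [float(len(words)), avg_len]


def encode_image(pixels: List[List[float]]) -> Vector:
    """Input module (image): toy features, mean and maximum brightness."""
    flat = [p for row in pixels for p in row]
    if not flat:
        return [0.0, 0.0]
    return [sum(flat) / len(flat), max(flat)]


def fuse(features: Dict[str, Vector]) -> Vector:
    """Fusion module: concatenate per-modality features in sorted name order."""
    fused: Vector = []
    for name in sorted(features):
        fused.extend(features[name])
    return fused


def predict(fused: Vector, weights: Vector, bias: float = 0.0) -> float:
    """Output module: a linear score over the fused feature vector."""
    if len(fused) != len(weights):
        raise ValueError("weights must match the fused feature length")
    return sum(f * w for f, w in zip(fused, weights)) + bias


def run_pipeline(text: str, pixels: List[List[float]], weights: Vector) -> float:
    """Run input -> fusion -> output for one text/image pair."""
    features = {"text": encode_text(text), "image": encode_image(pixels)}
    return predict(fuse(features), weights)
```

Concatenation is only one possible fusion strategy; it is used here because it keeps the three stages easy to tell apart.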

Advantages over Unimodal AI

Multimodal AI offers several benefits compared to traditional unimodal systems:

- Enhanced context understanding: By analyzing multiple data types simultaneously, multimodal AI can form a more nuanced and comprehensive understanding of a given subject or scenario.

- Wider range of tasks: These systems can handle more complex tasks that require integrating different types of information, such as generating images from text descriptions or analyzing video content.

- Improved accuracy and efficiency: Because one data type can compensate for noise or gaps in another, processing several together often leads to more accurate and reliable decision-making.
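One way to see why combining inputs can improve accuracy is a standard statistical result: if two sources give independent, noisy estimates of the same quantity, an inverse-variance weighted average has lower variance than either source alone. The sketch below assumes the estimates are independent and that their variances are known; the function name `fuse_estimates` is hypothetical, and real multimodal models learn their fusion rather than applying a fixed formula like this.

```python
from typing import List, Tuple


def fuse_estimates(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Combine (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance, assuming the input
    estimates are independent and every variance is positive.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total
```

For example, fusing an estimate of 10.0 with variance 4.0 and an estimate of 12.0 with variance 1.0 gives a value pulled toward the more reliable source, with a fused variance below 1.0.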

Applications

Multimodal AI is finding applications across various industries:

- Healthcare: Enhancing diagnostics by combining imaging data with patient records and genetic information.

- Autonomous vehicles: Integrating visual, radar, and ultrasonic data for improved navigation and decision-making.

- Customer service: Creating more natural and intuitive interfaces that can understand and respond to multiple types of user inputs.

- Content creation: Generating or manipulating various types of media based on diverse inputs.

Challenges

Despite its potential, multimodal AI faces several challenges:

- Higher data requirements for training

- Complexity in aligning and fusing different types of data

- Ethical concerns and privacy issues related to handling diverse personal data
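The alignment challenge listed above can be made concrete with a small example. Different data streams are often sampled at different rates, so before fusing them a system has to decide which samples belong together. The sketch below pairs each timestamp in one stream with the nearest timestamp in another; `align_nearest` is a hypothetical helper, and nearest-neighbour matching is just one simple strategy among many.

```python
from bisect import bisect_left
from typing import List


def align_nearest(reference_times: List[float], other_times: List[float]) -> List[int]:
    """For each reference timestamp, return the index of the nearest
    timestamp in other_times, which must be sorted in ascending order.
    Ties go to the earlier sample.
    """
    if not other_times:
        raise ValueError("other_times must not be empty")
    indices = []
    for t in reference_times:
        pos = bisect_left(other_times, t)
        # Only the neighbours on either side of the insertion point can be nearest.
        candidates = [i for i in (pos - 1, pos) if 0 <= i < len(other_times)]
        indices.append(min(candidates, key=lambda i: abs(other_times[i] - t)))
    return indices
```

With video frames at times `[0.0, 1.0, 2.0]` and audio samples at `[0.1, 0.9, 1.6, 2.5]`, each frame is matched to the audio sample closest in time, and the last audio sample is left unused.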

Popular Models

Some notable multimodal AI models include:

- GPT-4o (OpenAI): Handles text, images, and audio

- Claude 3 (Anthropic): Works with text and images

- Gemini (Google): Processes text, images, audio, and video (image generation was paused at the time of writing)

- DALL-E 3 (OpenAI): Specializes in text-to-image creation

As multimodal AI continues to evolve, it promises to bring more human-like interactions and understanding to artificial intelligence systems, potentially revolutionizing how we interact with technology across various domains.
